Limits are fundamental concepts in calculus that help us understand the behavior of a function as its input approaches a specific value. When we talk about limits, we are interested in what a function is doing near a certain value of \(x\), rather than at that exact point. This behavior can be visualized with graphs or analyzed using tables of values.

The notation for limits is typically written as \(\lim_{x \to c} f(x)\), which reads "the limit of f of x as x approaches c." For example, to find the limit of the function \(f(x) = x^2\) as \(x\) approaches 2, we examine the y-values that \(f(x)\) approaches as \(x\) gets very close to 2. Inputs such as 1.99 and 2.01 give \(f(1.99) = 3.9601\) and \(f(2.01) = 4.0401\), and inputs even closer to 2 give outputs even closer to 4. Because the outputs approach 4 from both sides, we conclude that \(\lim_{x \to 2} x^2 = 4\).
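The table-of-values approach for \(f(x) = x^2\) near \(x = 2\) can be sketched in Python. This is a minimal illustration rather than a proof: the helper name `table_of_values` and the step sizes 0.1, 0.01, and 0.001 are our own choices, and floating-point arithmetic means the printed values are rounded.

```python
def f(x):
    """The function from the example: f(x) = x^2."""
    return x ** 2


def table_of_values(func, c, steps=(0.1, 0.01, 0.001)):
    """Hypothetical helper: return (x, func(x)) pairs approaching c
    from the left and from the right, using the given step sizes."""
    left = [(c - h, func(c - h)) for h in steps]
    right = [(c + h, func(c + h)) for h in steps]
    return left, right


left, right = table_of_values(f, 2)

# Print each left-hand input next to its right-hand counterpart.
for (xl, yl), (xr, yr) in zip(left, right):
    print(f"x = {xl:.3f} -> {yl:.6f}    x = {xr:.3f} -> {yr:.6f}")
```

A table like this only suggests a limit; it cannot rule out unusual behavior between the sampled points, which is why graphs and algebraic reasoning are used alongside it.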

Another example involves the function \(f(x) = x + 4\) as \(x\) approaches 1. Evaluating inputs close to 1, such as 0.99 and 1.01, gives 4.99 and 5.01, so the outputs approach 5 from both sides. Thus, \(\lim_{x \to 1} (x + 4) = 5\). In both examples, substituting \(x = c\) directly into the function also gives the limit, because these functions are continuous at \(c\). Direct substitution is not always reliable, however: in some cases the limit does not equal the function's value at that point.
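As a rough check, the sketch below compares values of \(f(x) = x + 4\) near \(x = 1\) with the result of direct substitution. The step sizes are an illustrative assumption, not part of the original example.

```python
def f(x):
    """The function from the example: f(x) = x + 4."""
    return x + 4


c = 1
steps = (0.1, 0.01, 0.001)

left = [f(c - h) for h in steps]   # inputs 0.9, 0.99, 0.999
right = [f(c + h) for h in steps]  # inputs 1.1, 1.01, 1.001

# Round only for display; floating-point results can carry tiny errors.
print("from the left: ", [round(y, 6) for y in left])
print("from the right:", [round(y, 6) for y in right])

# f is continuous at 1, so direct substitution agrees with the table.
print("direct substitution f(1) =", f(c))
```

Both lists close in on 5, matching \(f(1) = 5\); for a continuous function like this one, the two approaches always agree.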

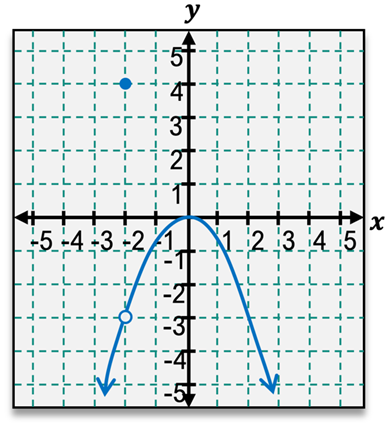

For instance, when examining a different function as \(x\) approaches 3, the graph may show the y-values approaching 1 from both sides, giving a limit of 1, even if the function's value at \(x = 3\) is 4. This discrepancy shows why we must focus on the behavior of the function around the point rather than its value at the point itself. Understanding limits therefore requires careful analysis of how the function's outputs behave as the input approaches a given value.
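The function behind the graph is not given, so the sketch below uses a hypothetical stand-in with the same behavior: \(f(x) = x - 2\) for \(x \neq 3\), with the single point \(f(3) = 4\) defined separately. It shows values near 3 clustering around 1 while the value at 3 itself is 4.

```python
def f(x):
    """Hypothetical stand-in for the graphed function:
    x - 2 everywhere except at x = 3, where it is defined as 4."""
    if x == 3:
        return 4  # the isolated point (3, 4)
    return x - 2


c = 3
steps = (0.1, 0.01, 0.001)

# Sample inputs just below and just above 3.
near_c = [f(c - h) for h in steps] + [f(c + h) for h in steps]

print("values near x = 3:", [round(y, 6) for y in near_c])  # cluster around 1
print("value at x = 3:", f(c))                               # but f(3) is 4
```

Plugging in \(x = 3\) here would report 4, while the nearby values point to a limit of 1, which is exactly the mismatch the graph illustrates.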