Linear regression is a statistical method used to model the relationship between a dependent variable and one or more independent variables. The least squares method is the most common technique for finding the line of best fit: it chooses the line that minimizes the sum of the squared residuals. A residual is the signed vertical difference between an observed data point and the value predicted by the regression line, written as:

\[d = y - \hat{y}\]

where \(d\) is the residual, \(y\) is the actual observed value, and \(\hat{y}\) is the predicted value from the regression equation.
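The least squares line can be computed directly from the data. The sketch below assumes simple (one-predictor) regression and uses the standard closed-form estimates, slope \(b_1 = S_{xy}/S_{xx}\) and intercept \(b_0 = \bar{y} - b_1\bar{x}\). The function names and the data values are hypothetical, chosen only for illustration.

```python
def least_squares_fit(xs, ys):
    """Return (slope, intercept) of the ordinary least squares line for one predictor."""
    n = len(xs)
    if n != len(ys) or n < 2:
        raise ValueError("need at least two paired observations")
    x_bar = sum(xs) / n
    y_bar = sum(ys) / n
    # Centered cross-product S_xy and centered sum of squares S_xx
    s_xy = sum((x - x_bar) * (y - y_bar) for x, y in zip(xs, ys))
    s_xx = sum((x - x_bar) ** 2 for x in xs)
    if s_xx == 0:
        raise ValueError("x values must not all be equal")
    slope = s_xy / s_xx
    intercept = y_bar - slope * x_bar
    return slope, intercept


def residuals(xs, ys, slope, intercept):
    """Residual d = y - y_hat for each observation."""
    return [y - (slope * x + intercept) for x, y in zip(xs, ys)]


# Hypothetical toy data, for illustration only
xs = [1, 2, 3, 4, 5]
ys = [2.0, 4.1, 5.9, 8.2, 9.8]

b1, b0 = least_squares_fit(xs, ys)
d = residuals(xs, ys, b1, b0)
print("slope:", b1, "intercept:", b0)
print("sum of squared residuals:", sum(r * r for r in d))
```

Because the model includes an intercept, the least squares residuals sum to zero (up to floating-point rounding), which makes a quick sanity check; nudging the slope or intercept away from the fitted values always increases the sum of squared residuals.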

To calculate residuals, one must first determine the predicted values (\(\hat{y}\)) using the regression equation. For example, if the regression equation is given as:

\[\hat{y} = 284x - 16101\]

then for each observed \(x\) value, substitute it into the equation to obtain \(\hat{y}\). The residual is the actual value minus this predicted value. Residuals can be positive or negative: a positive residual means the observed point lies above the regression line (the model underestimates it), and a negative residual means it lies below the line (the model overestimates it).
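The arithmetic can be checked with a few lines of code. This is a minimal sketch that applies the example equation \(\hat{y} = 284x - 16101\) to a handful of observations; the \((x, y)\) pairs and the `predict` helper are hypothetical, chosen only to illustrate the calculation.

```python
def predict(x):
    """Predicted value from the example regression equation y_hat = 284x - 16101."""
    return 284 * x - 16101


# Hypothetical observed (x, y) pairs, for illustration only
observations = [(60, 1000), (65, 2200), (70, 3800)]

for x, y in observations:
    y_hat = predict(x)
    d = y - y_hat  # residual: actual minus predicted
    position = "above" if d > 0 else "below" if d < 0 else "on"
    print(f"x={x}: y_hat={y_hat}, d={d} ({position} the line)")
# x=60: y_hat=939, d=61 (above the line)
# x=65: y_hat=2359, d=-159 (below the line)
# x=70: y_hat=3779, d=21 (above the line)
```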

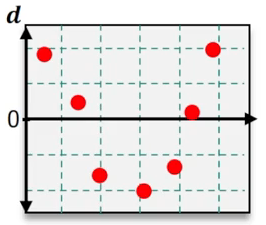

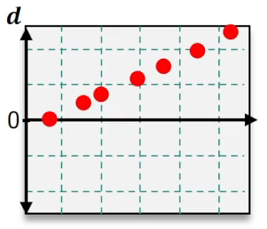

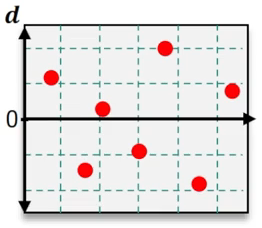

Once all residuals are calculated, they can be visualized in a residual plot, where the horizontal axis shows the independent variable \(x\) (some texts use the predicted values \(\hat{y}\) instead) and the vertical axis shows the residuals. This plot helps assess how well the regression model fits. A good fit is indicated by residuals scattered randomly above and below the horizontal axis, with no discernible pattern. Conversely, if the residuals show a systematic pattern, such as a curve (positive at both ends and negative in the middle, or the reverse) or a fan shape whose spread grows with \(x\), the linear model may not be appropriate for the data.
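A residual plot is normally inspected by eye, but the curved pattern described above can also be seen numerically. The sketch below fits a straight line to hypothetical data that actually follow \(y = x^2\) and prints the sign of each residual; long runs of the same sign are the numeric counterpart of a visible curve. Counting sign runs is the idea behind the Wald–Wolfowitz runs test, although no significance test is performed here, and the helper names are hypothetical.

```python
def fit_line(xs, ys):
    """Ordinary least squares slope and intercept for one predictor."""
    n = len(xs)
    x_bar = sum(xs) / n
    y_bar = sum(ys) / n
    s_xy = sum((x - x_bar) * (y - y_bar) for x, y in zip(xs, ys))
    s_xx = sum((x - x_bar) ** 2 for x in xs)
    slope = s_xy / s_xx
    return slope, y_bar - slope * x_bar


def residual_signs(xs, ys, tol=1e-9):
    """Fit a line, then encode each residual as '+', '-', or '0'."""
    slope, intercept = fit_line(xs, ys)
    res = [y - (slope * x + intercept) for x, y in zip(xs, ys)]
    return "".join("+" if r > tol else "-" if r < -tol else "0" for r in res)


def count_runs(signs):
    """Number of maximal blocks of identical signs (zeros ignored)."""
    s = signs.replace("0", "")
    return sum(1 for i, c in enumerate(s) if i == 0 or c != s[i - 1])


xs = list(range(-5, 6))
curved = [x * x for x in xs]  # hypothetical data with a quadratic trend

signs = residual_signs(xs, curved)
print(signs)              # ++-------++
print(count_runs(signs))  # 3
```

Eleven residuals falling into only three long runs, positive at both ends and negative in the middle, is exactly the curved pattern that signals a straight line is the wrong model for these data.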

In summary, analyzing residuals and their plots is crucial for evaluating the effectiveness of a linear regression model. A random distribution of residuals supports the model's validity, while patterns in the residuals suggest the need for alternative modeling approaches.