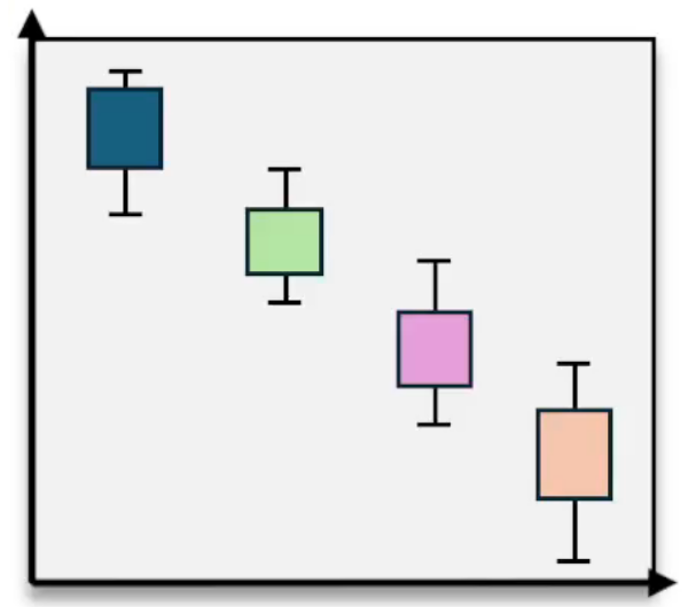

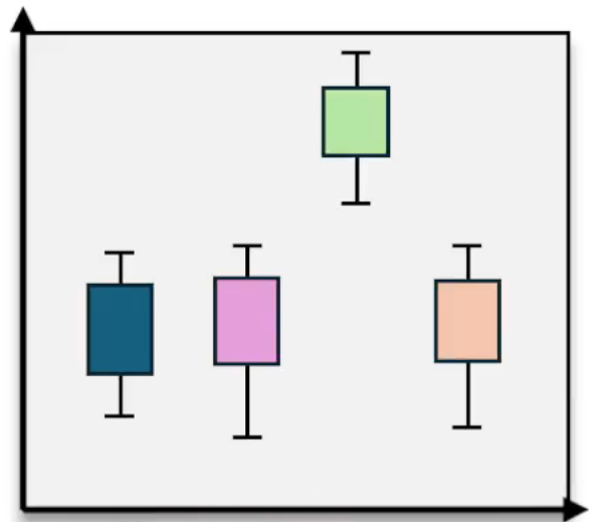

When conducting a one-way ANOVA test, rejecting the null hypothesis indicates that at least one group mean differs from the others. However, this result does not specify which means are different. To identify the specific pairs of means that differ, a follow-up procedure called a post hoc test is used. One common post hoc test is the Bonferroni test, which compares pairs of group means while controlling for the increased risk of Type I error due to multiple comparisons.

The Bonferroni test works by breaking down the multiple group means into all possible pairs and performing individual two-sample t-tests on each pair. For example, if there are three groups (such as grades 10, 11, and 12), the pairs tested would be (10 vs. 11), (11 vs. 12), and (10 vs. 12). Each pair is tested with the null hypothesis that the two means are equal, and the alternative hypothesis that they are not equal, making it a two-tailed test.
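The pairing step can be sketched in a few lines of Python. The group labels below are hypothetical and simply mirror the grade example above; the sketch only lists the comparisons and their hypotheses, without running any tests.

```python
from itertools import combinations

# Hypothetical group labels, mirroring the grade example in the text.
groups = ["grade 10", "grade 11", "grade 12"]

# Every unordered pair of groups gets its own two-sample comparison.
pairs = list(combinations(groups, 2))

for a, b in pairs:
    # Two-tailed test: the alternative is "not equal", in either direction.
    print(f"H0: mean({a}) = mean({b})   vs   Ha: mean({a}) != mean({b})")
```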

Key values needed for the Bonferroni test come from the ANOVA output, including the Mean Square Error (MSE), which is the pooled estimate of the variance within groups. The MSE replaces the separate sample variances in each t-test, so every comparison uses variance information from all groups. Other important parameters include the total sample size (N), the number of groups (k), and the degrees of freedom for error, calculated as \(df = N - k\).
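In practice these values are read from the ANOVA table, but it can help to see where they come from. The following is a minimal sketch that assumes the raw observations are available. The scores are made up for illustration, and the variable names (`data`, `sse`, `mse`) are not from any particular library.

```python
from statistics import mean

# Hypothetical raw scores for each group (illustrative values only).
data = {
    "grade 10": [4, 5, 6],
    "grade 11": [6, 7, 8],
    "grade 12": [9, 10, 11],
}

N = sum(len(values) for values in data.values())  # total sample size
k = len(data)                                     # number of groups
df_error = N - k                                  # degrees of freedom for error

# Sum of squared deviations of each observation from its own group mean.
sse = sum(
    (x - mean(values)) ** 2
    for values in data.values()
    for x in values
)

# Mean Square Error: pooled within-group variance estimate.
mse = sse / df_error

print(f"N = {N}, k = {k}, df = {df_error}, MSE = {mse:.3f}")
```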

The test statistic for each pairwise comparison is calculated using the formula:

\[t = \frac{\bar{x}_1 - \bar{x}_2}{\sqrt{MSE \left(\frac{1}{n_1} + \frac{1}{n_2}\right)}}\]

where \(\bar{x}_1\) and \(\bar{x}_2\) are the sample means of the two groups, \(n_1\) and \(n_2\) are their respective sample sizes, and \(MSE\) is the mean square error from the ANOVA.
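The formula translates directly into a small function. This is a sketch only: the function name `pairwise_t` and the input values are hypothetical, chosen to make the arithmetic easy to follow.

```python
import math

def pairwise_t(mean1, mean2, n1, n2, mse):
    """t statistic for one pairwise comparison, using the ANOVA MSE."""
    standard_error = math.sqrt(mse * (1 / n1 + 1 / n2))
    return (mean1 - mean2) / standard_error

# Hypothetical inputs: two group means, three observations each, MSE = 1.0.
t_stat = pairwise_t(mean1=5.0, mean2=7.0, n1=3, n2=3, mse=1.0)
print(f"t = {t_stat:.3f}")
```

The sign of the result only reflects which group was listed first. For a two-tailed test, the magnitude \(|t|\) is what matters.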

After calculating the t-statistic, the corresponding p-value is found using the t-distribution with the error degrees of freedom, \(df = N - k\). Since the test is two-tailed, the one-tail probability beyond \(|t|\) is doubled. However, because multiple pairwise tests are conducted, the Bonferroni correction adjusts for the increased chance of false positives by multiplying each p-value by the number of comparisons (pairs), capping the result at 1. This adjustment keeps the overall (familywise) Type I error rate at or below the chosen significance level, typically \(\alpha = 0.05\).
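The sketch below illustrates both steps using only the Python standard library. A statistics package would normally supply the t-distribution tail probability. Here it is approximated by numerically integrating the density with Simpson's rule, which is an assumption made to keep the example self-contained. The function names and the input values are hypothetical.

```python
import math

def t_pdf(x, df):
    """Density of Student's t distribution with df degrees of freedom."""
    log_const = (
        math.lgamma((df + 1) / 2)
        - math.lgamma(df / 2)
        - 0.5 * math.log(df * math.pi)
    )
    return math.exp(log_const - (df + 1) / 2 * math.log1p(x * x / df))

def two_tailed_p(t_stat, df, steps=2000):
    """P(|T| >= |t_stat|), via Simpson's rule on the density over [0, |t_stat|]."""
    upper = abs(t_stat)
    if upper == 0:
        return 1.0
    h = upper / steps  # steps must be even for Simpson's rule
    total = t_pdf(0.0, df) + t_pdf(upper, df)
    for i in range(1, steps):
        total += (4 if i % 2 else 2) * t_pdf(i * h, df)
    central_area = 2 * (h / 3) * total  # area between -|t| and +|t|
    return max(0.0, 1.0 - central_area)

def bonferroni_adjust(p_value, num_comparisons):
    """Multiply the raw p-value by the number of comparisons, capped at 1."""
    return min(1.0, p_value * num_comparisons)

# Hypothetical inputs: t = -2.449 with 6 error degrees of freedom, 3 pairs.
raw_p = two_tailed_p(-2.449, df=6)
adjusted_p = bonferroni_adjust(raw_p, num_comparisons=3)
print(f"raw p = {raw_p:.4f}, Bonferroni-adjusted p = {adjusted_p:.4f}")
```

The cap at 1 matters because a probability cannot exceed 1, even when the raw p-value times the number of comparisons would.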

The number of pairs can be calculated using combinations: for \(k\) groups, the number of pairs is given by the binomial coefficient:

\[\binom{k}{2} = \frac{k(k-1)}{2}\]

For example, with three groups, there are three pairs.
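A short sketch shows how quickly the number of comparisons, and therefore the Bonferroni multiplier, grows with the number of groups. The helper name `num_pairs` is hypothetical.

```python
from math import comb

def num_pairs(k):
    """Number of pairwise comparisons among k groups."""
    return comb(k, 2)

for k in range(2, 7):
    # comb(k, 2) agrees with the closed form k(k-1)/2.
    assert num_pairs(k) == k * (k - 1) // 2
    print(f"{k} groups -> {num_pairs(k)} pairwise comparisons")
```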

Once the adjusted p-values are obtained, each is compared to the significance level \(\alpha\). If the adjusted p-value is less than \(\alpha\), the null hypothesis for that pair is rejected, indicating a statistically significant difference between those two group means. If the adjusted p-value is greater than or equal to \(\alpha\), there is insufficient evidence to conclude that the two means differ.
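The decision rule can be sketched as follows. The adjusted p-values here are invented purely to show both outcomes and do not come from any real data set.

```python
alpha = 0.05

# Hypothetical Bonferroni-adjusted p-values, for illustration only.
adjusted_p = {
    ("grade 10", "grade 11"): 0.150,
    ("grade 11", "grade 12"): 0.030,
    ("grade 10", "grade 12"): 0.002,
}

# Reject the pair's null hypothesis only when adjusted p < alpha.
decisions = {pair: p < alpha for pair, p in adjusted_p.items()}

for (a, b), reject in decisions.items():
    verdict = "reject H0 (means differ)" if reject else "fail to reject H0"
    print(f"{a} vs {b}: adjusted p = {adjusted_p[(a, b)]:.3f} -> {verdict}")
```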

In practice, the Bonferroni test can be tedious due to multiple calculations, but it provides a rigorous method to pinpoint which specific group means differ after an overall ANOVA indicates a difference exists. This approach helps maintain control over Type I error rates while allowing detailed pairwise comparisons.