Two-way ANOVA is a statistical method used to compare the means of a dependent variable across two different factors simultaneously. Unlike one-way ANOVA, which examines differences across one factor, two-way ANOVA evaluates how two independent variables influence the outcome and whether there is an interaction effect between them. For example, in studying plant growth, factors such as sunlight exposure (low, medium, high) and fertilizer level (one or three tablespoons per week) can both impact growth, and two-way ANOVA helps analyze these effects together.

In two-way ANOVA, the dependent variable is measured across all combinations of the two factors, often arranged in a data table with one factor along the rows and the other along the columns. The analysis involves testing three hypotheses: first, whether there is an interaction effect between the two factors; second, whether the first factor alone has a significant effect; and third, whether the second factor alone has a significant effect.
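As a rough illustration of this layout, the sketch below stores measurements keyed by the combination of the two factors and computes the mean for each cell, each row, and each column. The growth values, the variable names, and the choice of three plants per cell are all assumptions made up for this example, not data from the study described above.

```python
from statistics import mean

# Hypothetical plant-growth measurements (cm), keyed by
# (sunlight level, fertilizer in tablespoons per week).
# The numbers are invented purely for illustration.
growth = {
    ("low", 1): [4.0, 5.0, 6.0],
    ("low", 3): [5.0, 6.0, 7.0],
    ("medium", 1): [6.0, 7.0, 8.0],
    ("medium", 3): [8.0, 9.0, 10.0],
    ("high", 1): [7.0, 8.0, 9.0],
    ("high", 3): [11.0, 12.0, 13.0],
}

sunlight_levels = ["low", "medium", "high"]  # rows of the table
fertilizer_levels = [1, 3]                   # columns of the table

# Cell means: one mean per combination of the two factors.
cell_means = {cell: mean(values) for cell, values in growth.items()}

# Marginal means: average the cell means over the other factor.
# A plain average is appropriate here because every cell has the same size.
sunlight_means = {
    s: mean([cell_means[(s, f)] for f in fertilizer_levels])
    for s in sunlight_levels
}
fertilizer_means = {
    f: mean([cell_means[(s, f)] for s in sunlight_levels])
    for f in fertilizer_levels
}

# Print the table with sunlight along the rows and fertilizer along the columns.
for s in sunlight_levels:
    row = "  ".join(f"{cell_means[(s, f)]:5.1f}" for f in fertilizer_levels)
    print(f"{s:>6}: {row}   (row mean {sunlight_means[s]:.2f})")
```

The three hypotheses map onto this table directly: the two main effects ask whether the row means or the column means differ, and the interaction asks whether the pattern across columns changes from row to row.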

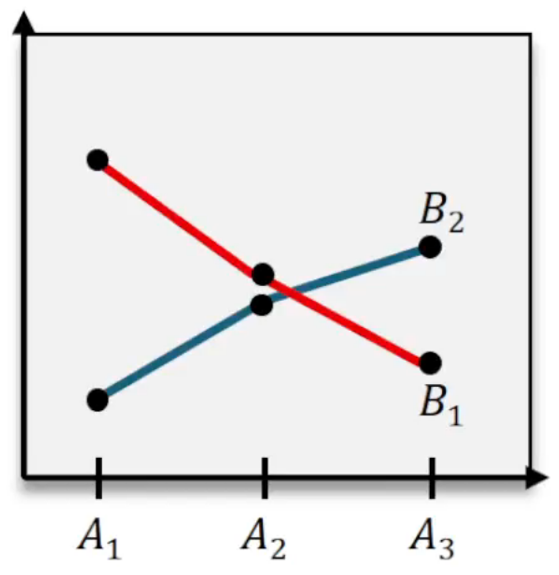

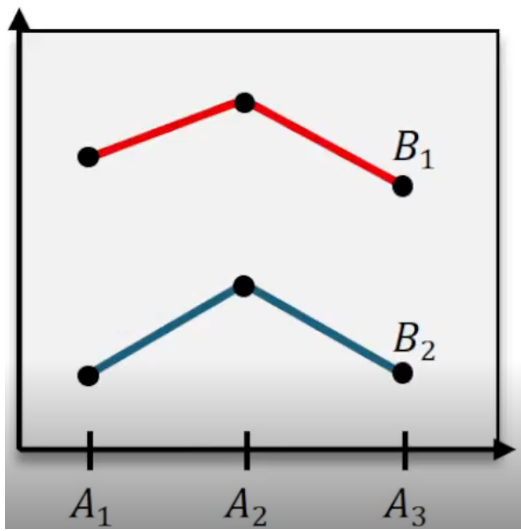

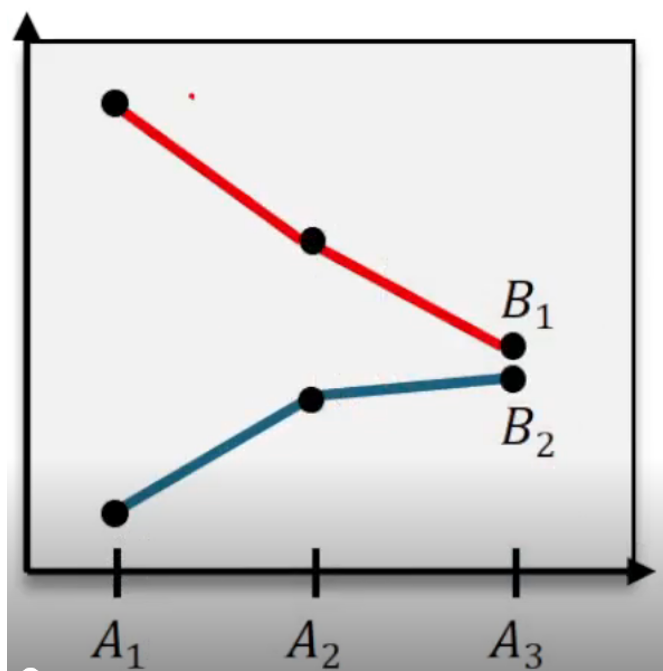

An interaction effect occurs when the effect of one factor on the dependent variable depends on the level of the other factor. For instance, if increasing fertilizer leads to a small increase in plant growth under low sunlight but a much larger increase under high sunlight, this indicates an interaction between fertilizer and sunlight. Detecting interaction is crucial because, when a significant interaction exists, the effect of one factor is no longer a single quantity: it changes with the level of the other factor, so each factor's main effect cannot be interpreted on its own.
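The fertilizer and sunlight example can be made concrete as a "difference of differences". The sketch below uses hypothetical cell means (the values and the helper name `fertilizer_effect` are assumptions for illustration) and compares the fertilizer effect at two sunlight levels.

```python
# Hypothetical mean growth (cm) for each (sunlight, tablespoons) combination.
mean_growth = {
    ("low", 1): 5.0, ("low", 3): 6.0,
    ("high", 1): 8.0, ("high", 3): 12.0,
}


def fertilizer_effect(sunlight):
    """Change in mean growth when going from 1 to 3 tablespoons
    at the given sunlight level."""
    return mean_growth[(sunlight, 3)] - mean_growth[(sunlight, 1)]


effect_low = fertilizer_effect("low")    # 1.0: small gain under low sunlight
effect_high = fertilizer_effect("high")  # 4.0: larger gain under high sunlight

# If there were no interaction, the two effects would be equal and this
# contrast would be zero.
interaction_contrast = effect_high - effect_low  # 3.0
print(effect_low, effect_high, interaction_contrast)
```

A nonzero contrast in sample means only suggests an interaction. Whether it is larger than what random variation would produce is what the F test decides.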

The hypotheses for the interaction effect are set as follows: the null hypothesis (H₀) states that there is no interaction between the factors, while the alternative hypothesis (H₁) claims that an interaction exists. To test this, the F statistic is calculated as the ratio of the mean square due to interaction to the mean square due to error:

\[ F = \frac{\text{Mean Square Interaction}}{\text{Mean Square Error}} \]

The mean square error (MSE) represents the variability within groups and serves as the denominator of every F test in this analysis (the standard fixed-effects two-way ANOVA). If the p-value associated with the F statistic is less than the significance level (commonly α = 0.05), the null hypothesis is rejected, indicating a significant interaction effect. Otherwise, the null hypothesis is not rejected: the data do not provide strong evidence of an interaction, which is not the same as showing that none exists.
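A minimal sketch of this calculation, assuming a balanced design (the same number of observations, at least two, in every cell) and using made-up growth data. The function name `interaction_f` and the data are hypothetical, and the sums of squares follow the usual textbook formulas for the balanced case rather than anything specific to the source.

```python
from statistics import mean


def interaction_f(data, levels_a, levels_b):
    """Return (MS_interaction, MS_error, F) for a balanced two-way layout.

    data maps (a_level, b_level) -> list of observations.
    """
    a, b = len(levels_a), len(levels_b)
    n = len(data[(levels_a[0], levels_b[0])])
    if n < 2 or any(len(data[(i, j)]) != n for i in levels_a for j in levels_b):
        raise ValueError("sketch assumes a balanced design with n >= 2 per cell")

    cell = {(i, j): mean(data[(i, j)]) for i in levels_a for j in levels_b}
    grand = mean([cell[(i, j)] for i in levels_a for j in levels_b])
    mean_a = {i: mean([cell[(i, j)] for j in levels_b]) for i in levels_a}
    mean_b = {j: mean([cell[(i, j)] for i in levels_a]) for j in levels_b}

    # Interaction: what is left of each cell mean after removing the
    # row effect and the column effect.
    ss_inter = n * sum(
        (cell[(i, j)] - mean_a[i] - mean_b[j] + grand) ** 2
        for i in levels_a for j in levels_b
    )
    # Error: spread of individual observations around their own cell mean.
    ss_error = sum(
        (y - cell[(i, j)]) ** 2
        for i in levels_a for j in levels_b for y in data[(i, j)]
    )

    ms_inter = ss_inter / ((a - 1) * (b - 1))
    ms_error = ss_error / (a * b * (n - 1))
    return ms_inter, ms_error, ms_inter / ms_error


# Hypothetical growth data (cm): 3 sunlight levels x 2 fertilizer levels,
# 3 plants per cell.
growth = {
    ("low", 1): [4.0, 5.0, 6.0], ("low", 3): [5.0, 6.0, 7.0],
    ("medium", 1): [6.0, 7.0, 8.0], ("medium", 3): [8.0, 9.0, 10.0],
    ("high", 1): [7.0, 8.0, 9.0], ("high", 3): [11.0, 12.0, 13.0],
}
ms_inter, ms_error, f_stat = interaction_f(growth, ["low", "medium", "high"], [1, 3])
print(f"MS interaction = {ms_inter:.2f}, MSE = {ms_error:.2f}, F = {f_stat:.2f}")
```

For these invented numbers the statistic works out to F = 3.5 with 2 and 12 degrees of freedom, which falls just short of the 5% critical value of about 3.89, so the null hypothesis of no interaction would not be rejected. To obtain the p-value itself one needs the F distribution; if SciPy is available, `scipy.stats.f.sf(f_stat, 2, 12)` is one way to compute it.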

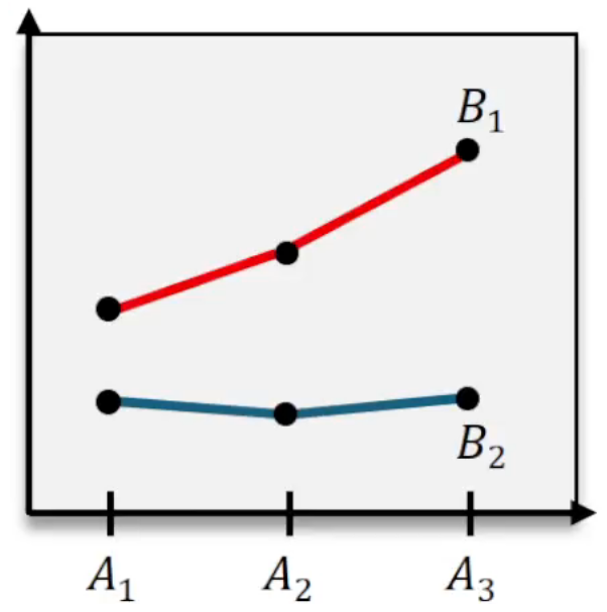

When no significant interaction is found, the analysis proceeds to test the main effect of each factor separately. Each factor's mean square is compared to the mean square error, giving an F statistic of the same form:

\[ F = \frac{\text{Mean Square Factor}}{\text{Mean Square Error}} \]

For example, testing the effect of fertilizer level involves the null hypothesis that mean plant growth is the same at every fertilizer level, against the alternative that it differs for at least one level. A p-value below the significance level (for example, α = 0.05) leads to rejecting the null hypothesis, indicating that fertilizer level significantly affects plant growth. The same approach applies to sunlight exposure.
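To spell out what goes into this ratio, the mean squares can be written explicitly for a balanced design. The notation here is introduced only for illustration and is not taken from the source: factor A has \(a\) levels, factor B has \(b\) levels, each cell holds \(n\) observations \(y_{ijk}\), and a bar with dots denotes an average over the dotted indices.

\[ \text{Mean Square Factor A} = \frac{b\,n \sum_{i=1}^{a} \left(\bar{y}_{i\cdot\cdot} - \bar{y}_{\cdot\cdot\cdot}\right)^2}{a - 1}, \qquad \text{Mean Square Error} = \frac{\sum_{i=1}^{a} \sum_{j=1}^{b} \sum_{k=1}^{n} \left(y_{ijk} - \bar{y}_{ij\cdot}\right)^2}{ab(n - 1)} \]

The resulting F statistic is compared against an F distribution with \(a - 1\) and \(ab(n - 1)\) degrees of freedom. The mean square for factor B is analogous, with the roles of \(a\) and \(b\) swapped. In the plant example, fertilizer has two levels and therefore \(2 - 1 = 1\) numerator degree of freedom, while sunlight has three levels and \(3 - 1 = 2\).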

In summary, two-way ANOVA allows for a comprehensive analysis of how two factors and their potential interaction influence a dependent variable. The process begins by testing for interaction effects; if none are found, the main effects of each factor are evaluated separately. This method provides valuable insights into complex experimental designs where multiple factors simultaneously impact outcomes.
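The order of tests summarized above can be sketched as a small decision function. The function name, its return format, and the p-values in the usage lines are all hypothetical, and the function assumes the three p-values have already been computed elsewhere.

```python
def two_way_anova_decisions(p_interaction, p_factor_a, p_factor_b, alpha=0.05):
    """Apply the testing order described in the text.

    The interaction is tested first. Main effects are evaluated separately
    only when the interaction is not significant; otherwise they are
    reported as None (not interpreted on their own).
    """
    if p_interaction < alpha:
        return {"interaction": True, "factor_a": None, "factor_b": None}
    return {
        "interaction": False,
        "factor_a": p_factor_a < alpha,
        "factor_b": p_factor_b < alpha,
    }


# Hypothetical p-values: no significant interaction, factor A significant.
print(two_way_anova_decisions(0.40, 0.001, 0.20))
# Hypothetical p-values: significant interaction, main effects set aside.
print(two_way_anova_decisions(0.01, 0.50, 0.50))
```

Returning `None` for the main effects when the interaction is significant is a design choice for this sketch: it mirrors the point that individual effects are hard to interpret in that case.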